- Part One: System Overview

- Part Two: System Overview: Messages and Applications

- Part Three: Screen Scraping

- Part Four: YouTube Parsing

- Part Five: Linking the Video to the Game

- Part Six: Messaging Middleware

- Part Seven: The Console

- Part Eight: The Site

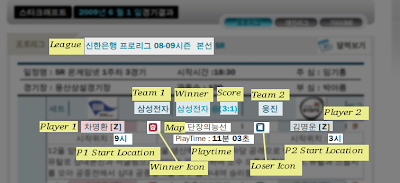

As I said in my last few posts, this project needs data on every match schedule. I decided the best way to get it was to scrape the KeSPA website. Here is a sample schedule page:

http://www.e-sports.or.kr/teams/player1.kea?m_code=team_24&pGame=1&pCode=1248

In case I haven't said it before: no, I do not speak Korean. Luckily, I didn't really need to. Most of what I needed could be worked out from this page using only intuition (and the Google Translate add-on) :)

In the page's HTML, the players and maps are anchor elements that link to pages with more info on the player and map, respectively. Each of those URLs contains a unique id for the player or map, so I decided to use these ids throughout my engine. The league name and teams are just plain text, so I grab them as-is when I'm scraping.
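Pulling the id out of one of those links can be done with the standard library alone. The following is a minimal sketch, not the code from my engine: `extract_id` is a hypothetical helper, it is written for Python 3 (`urllib.parse`), and it assumes `pCode` is the query parameter that carries the player id, which is what the player page URL suggests. The parameter name used for maps is not shown here, so it is left to the caller.

```python
from urllib.parse import parse_qs, urlparse


def extract_id(href, param):
    """Return the integer value of query parameter `param` in `href`.

    Returns None when the parameter is missing or is not a number.
    Hypothetical helper for illustration only.
    """
    values = parse_qs(urlparse(href).query).get(param)
    if not values:
        return None
    try:
        return int(values[0])
    except ValueError:
        return None


# Assumption: `pCode` identifies the player in a player page URL.
player_url = (
    "http://www.e-sports.or.kr/teams/player1.kea"
    "?m_code=team_24&pGame=1&pCode=1248"
)
print(extract_id(player_url, "pCode"))  # prints 1248
```

Converting to `int` keeps the ids comparable with plain numbers, which is how the tests further down check them.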

After fetching the page using urllib2, I cut out some of the cruft and load the rest into BeautifulSoup. I test the scrapers by saving real pages from the site to files and using those files as the test input. When I find a new page style, I save that page to another file and write a test for it. Here's an example...

    class ProleagueMatchScraperTests(BaseScraperTestCase):
        """
        Original =
        http://www.e-sports.or.kr/schedule/daily01_sche.kea?m_code=sche_12&gDate=20090603&gDvs=T&miniCal=2009-06-01
        """
        TEST_FILE = "proleague/match.html"
        SCRAPER = ProleagueMatchScraper

        def test_teams(self):
            self.assertEquals(self.results['team_one'], u'KTF')
            self.assertEquals(self.results['team_two'], u'MBC게임')
            self.assertEquals(self.results['winner'], None)
            self.assertEquals(self.results['winner_score'], None)
            self.assertEquals(self.results['loser_score'], None)

        def test_game(self):
            self.assertEquals(len(self.results['games']), 5)
            game = self.results['games'][0]
            self.assertEquals(game['player_one'], 988)
            self.assertEquals(game['player_two'], 851)
            self.assertEquals(game['map'], 1193)
            self.assertEquals(game['winner'], None)

        def test_ace_match(self):
            game = self.results['games'][4]
            self.assertEquals(game['player_one'], None)
            self.assertEquals(game['player_two'], None)
            self.assertEquals(game['map'], 1207)
            self.assertEquals(game['winner'], None)

        def test_stage_info(self):
            self.assertEquals(self.results['stage_path'], ['Week 1', 'Day 5'])

Each test class has TEST_FILE and SCRAPER attributes that BaseScraperTestCase uses to run the entire scrape in setUp. TEST_FILE names the file holding the HTML I pulled from the web site for that test, and SCRAPER is the class that will actually do the scraping. Thus, I can add new tests very easily.
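To make that concrete, here is a minimal sketch of what a base class like this could look like. It is an illustration under assumptions, not the real BaseScraperTestCase: the `FIXTURE_DIR` attribute, the `parse` hook, and the UTF-8 fixture encoding are all made up for the example. In the real thing `parse` would build the BeautifulSoup object; here it returns the raw HTML so the sketch runs with the standard library only.

```python
import io
import os
import unittest


class BaseScraperTestCase(unittest.TestCase):
    """Runs SCRAPER over the saved page TEST_FILE before every test."""

    FIXTURE_DIR = "fixtures"  # assumption: where the saved pages live
    TEST_FILE = None          # set by each subclass
    SCRAPER = None            # set by each subclass

    @staticmethod
    def parse(html):
        # Stand-in for building a BeautifulSoup object from the HTML.
        return html

    def setUp(self):
        if self.TEST_FILE is None or self.SCRAPER is None:
            self.skipTest("base class has nothing to scrape")
        path = os.path.join(self.FIXTURE_DIR, self.TEST_FILE)
        # Assumption: fixtures were saved as UTF-8.
        with io.open(path, encoding="utf-8") as handle:
            html = handle.read()
        # Every test method reads its expectations from self.results.
        self.results = self.SCRAPER(self.parse(html)).scrape()
```

With this shape, a new test class only needs to set the two attributes and assert against `self.results`, which matches how the example test above is written.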

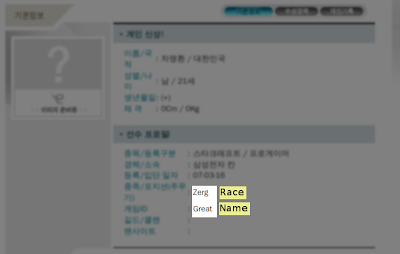

In addition to scraping schedules, I also need to scrape each player's page to find out stuff like their name and race. I'll use that as an example of what an actual scraper object looks like, because it's a bit simpler than the schedule scraper. The page looks as follows:

http://www.e-sports.or.kr/teams/player1.kea?m_code=team_24&pGame=1&pCode=1248

Yeah, the guy's name is "Great". :P

    class PlayerScraper(object):
        NAME_PATH = [0, 1, 3, 1, 3, 1, 0, 15, 1, 0, 9, 7]
        RACE_PATH = [0, 1, 3, 1, 3, 1, 0, 15, 1, 0, 7, 7]

        def __init__(self, soup):
            self.soup = soup

        def scrape(self):
            # If the name is not there, then we have a blank page, so it's not a
            # legit player.
            pre_name_elem = utils.dive_into_soup(self.soup, self.NAME_PATH)
            if len(pre_name_elem.contents) == 0:
                return None
            name = unicode(pre_name_elem.contents[0]).strip()

            pre_race_elem = utils.dive_into_soup(self.soup, self.RACE_PATH)
            if len(pre_race_elem.contents) == 0:
                race = None
            else:
                race = unicode(pre_race_elem.contents[0]).lower()

            return {
                'name' : name,
                'race' : race,
                'aliases' : []
            }

The "soup" constructor argument is the BeautifulSoup object. I've found the easiest way to get at the data I want is to construct a "path" to the html element. The utils.dive_into_soup function looks like this:

    def dive_into_soup(soup, content_navigation):
        s = soup
        for index, content in enumerate(content_navigation):
            try:
                s = s.contents[content]
            except IndexError as e:
                raise DiveError(e, content_navigation, index)
        return s

So, basically, if the "path" is [0,3,2,4], then starting from the root element, I take the child at index 0, then that element's child at index 3, and so on until the path runs out, then return the element I'm at. To make the creation of these "dive codes" easier, I've written a hacky little function to create them for me based on text that I specify.
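As an illustration of both halves of that idea, here is a small self-contained sketch. None of it is the actual code from my utils module apart from `dive_into_soup` itself: `DiveError` is a guess at a reasonable shape for the exception, `Node` is a tiny stand-in for a parsed element (anything with a `.contents` list), and `find_dive_path` is a hypothetical version of the path-generating helper, which searches depth-first for the element that directly contains a given string.

```python
class DiveError(Exception):
    """Hypothetical shape: remembers which step of the path failed."""

    def __init__(self, cause, path, index):
        message = "dive failed at step %d of %r: %s" % (index, path, cause)
        super(DiveError, self).__init__(message)
        self.cause = cause
        self.path = path
        self.index = index


class Node(object):
    """Stand-in for a parsed element; children live in .contents."""

    def __init__(self, *contents):
        self.contents = list(contents)


def dive_into_soup(soup, content_navigation):
    s = soup
    for index, content in enumerate(content_navigation):
        try:
            s = s.contents[content]
        except IndexError as e:
            raise DiveError(e, content_navigation, index)
    return s


def find_dive_path(node, text, path=()):
    """Return the path to the element whose direct child is `text`.

    Depth-first search; returns None when the text is not found.
    """
    for i, child in enumerate(getattr(node, "contents", [])):
        if isinstance(child, str) and child.strip() == text:
            return list(path)
        found = find_dive_path(child, text, path + (i,))
        if found is not None:
            return found
    return None


# A toy "page": a header, then a row holding a label and a value.
page = Node(
    Node("header"),
    Node(Node("Name"), Node("Great")),
)

path = find_dive_path(page, "Great")
print(path)  # prints [1, 1]
print(dive_into_soup(page, path).contents[0])  # prints Great
```

The returned path stops at the element that holds the text rather than at the text itself, which is the convention PlayerScraper relies on when it reads `contents[0]` of the element it dives to.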

Honestly, the idea of a "dive path" is kind of a hack. I'd much rather be able to take the page and just say, "give me the element next to #player_name". Unfortunately, the entire KeSPA site uses table layouts, and the ids and classes are pretty much all for layout purposes as well, so this 'dive' approach seems to be the better option.
